As artificial intelligence evolves rapidly, our understanding of AI systems' cognitive abilities is constantly being challenged and redefined.

People in the AI community on X/Twitter often argue about whether a newly released model is "conscious" or "sentient", but there is no single standard for these terms that everyone agrees on. Even philosophers have spent decades on the question without reaching a conclusive answer.

In this post, I'd like to introduce an alternative perspective: viewing AI cognitive abilities on a spectrum rather than through a binary lens. Let's break down what we consider important for consciousness and sentience into separate cognitive abilities. Everyone can still hold their own opinion and standard for what counts as conscious or sentient, while we gain relatively more objective measurements for comparing models.

The Problem with Binary Thinking

In the current era of AI development, we're witnessing the emergence of systems with increasingly sophisticated cognitive abilities. Yet, our discussions often fall into the trap of binary debates: Is an AI system conscious or not? Can it think, or is it just mimicking thought? These yes-or-no questions, while tempting in their simplicity, fail to capture the nuanced reality of AI cognition.

Introducing the Spectrum Approach

Instead of asking whether an AI system has a particular cognitive ability that people believe meets their own standard of "true consciousness", what if we asked to what degree it possesses that ability? This shift from a binary to a spectrum view aligns more closely with how we understand the evolution of cognition in nature: as a gradual, multifaceted process.

Consider an AI system that is aware of its own existence but unable to reflect on its own thoughts. In a binary view, we might dismiss it as non-conscious. But on a spectrum, we could recognize it as having a degree of self-awareness, albeit less developed than human consciousness.

Visualizing the Cognitive Spectrum

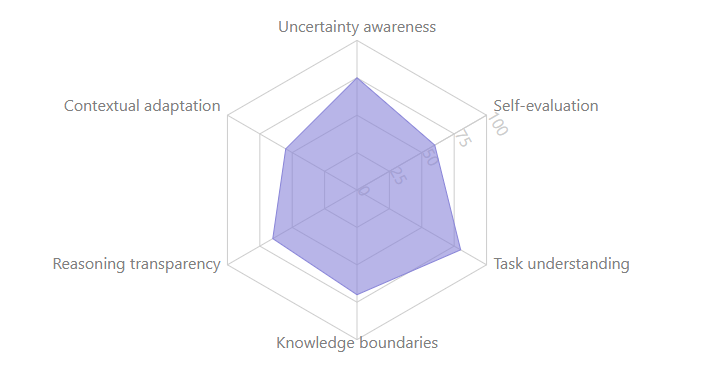

To make this concept more tangible, I propose using star charts to represent different aspects of metacognition. While the exact variables and measurements would require further research and formalization, this approach could provide a valuable tool for comparing different AI models.

Here's an example of what such a star chart might look like:

In this chart, we see various aspects of cognition such as:

- Uncertainty awareness

- Self-evaluation

- Task understanding

- Knowledge boundaries

- Reasoning transparency

- Contextual adaptation

Each spoke of the star represents a spectrum for that particular cognitive ability, allowing us to visualize the strengths and limitations of different AI systems.
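As a rough illustration of how each spoke could become a point on the chart, here is a minimal Python sketch. The scores are made-up numbers on an assumed 0-to-1 scale, and `star_chart_vertices` is a hypothetical helper rather than part of any library. How to actually obtain the scores is still the open question.

```python
import math

# Hypothetical scores in [0, 1] for a single model. The axis names come from
# the list above; the numbers are invented purely for illustration.
SCORES = {
    "Uncertainty awareness": 0.8,
    "Self-evaluation": 0.5,
    "Task understanding": 0.9,
    "Knowledge boundaries": 0.6,
    "Reasoning transparency": 0.3,
    "Contextual adaptation": 0.7,
}


def star_chart_vertices(scores):
    """Return one (label, x, y) tuple per spoke.

    Spokes are spaced evenly around the circle. The first spoke points
    straight up and the rest follow clockwise. A score of 1.0 lands on the
    unit circle and a score of 0.0 lands on the center.
    """
    n = len(scores)
    vertices = []
    for i, (label, value) in enumerate(scores.items()):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"score for {label!r} must be in [0, 1]")
        angle = math.pi / 2 - 2 * math.pi * i / n
        vertices.append((label, value * math.cos(angle), value * math.sin(angle)))
    return vertices


if __name__ == "__main__":
    for label, x, y in star_chart_vertices(SCORES):
        print(f"{label:<24} x={x:+.2f} y={y:+.2f}")
```

Connecting the returned points in order gives the polygon for one model. Any plotting tool that can draw a closed path could render it.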

Variables and Measurement: A Deeper Dive

It's important to note that the variables shown in our example star chart are just that – examples. They're meant to serve as a starting point for discussion, not as a definitive set of cognitive metrics. The field of AI cognition is complex and multifaceted, and determining the most relevant variables to measure will require extensive research and debate within the AI community.

Some questions we need to consider when defining these variables include:

- What aspects of cognition are most relevant to AI systems?

- How do we ensure our chosen variables are measurable and quantifiable?

- Are there cognitive abilities unique to AI that we should include?

- How do we account for the potential bias in our measurements?

The measurement of these variables presents its own set of challenges. We'll need to develop standardized tests or benchmarks for each cognitive ability we wish to measure. These could involve a combination of task performance, behavioral analysis, and possibly even analysis of the AI's internal processes (to the extent that's possible).

For example, to measure "uncertainty awareness," we might present the AI with a series of increasingly ambiguous tasks and assess its ability to recognize and communicate its level of certainty. For "contextual adaptation," we could evaluate the AI's performance across a wide range of scenarios, noting how well it adjusts its behavior based on changing contexts.
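One possible way to put a number on "uncertainty awareness" is to compare the confidence a model states with how often it turns out to be right. The sketch below uses expected calibration error, a common calibration metric that I'm swapping in as an assumption, not a settled choice for this framework. The record format, the bin count, and the sample data are all hypothetical.

```python
def expected_calibration_error(records, n_bins=5):
    """Weighted average gap between stated confidence and actual accuracy.

    `records` is a list of (confidence, correct) pairs, where confidence is
    the probability in [0, 1] that the model assigned to its own answer and
    correct is a bool. Returns 0.0 for perfect calibration; larger is worse.
    """
    if not records:
        raise ValueError("records must not be empty")

    # Group answers into equal-width confidence bins.
    bins = [[] for _ in range(n_bins)]
    for confidence, correct in records:
        if not 0.0 <= confidence <= 1.0:
            raise ValueError("confidence must be in [0, 1]")
        index = min(int(confidence * n_bins), n_bins - 1)
        bins[index].append((confidence, correct))

    # Sum each bin's |mean confidence - accuracy|, weighted by bin size.
    total = len(records)
    error = 0.0
    for bucket in bins:
        if not bucket:
            continue
        mean_confidence = sum(c for c, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        error += len(bucket) / total * abs(mean_confidence - accuracy)
    return error


# Invented sample: (stated confidence, whether the answer was correct).
SAMPLE = [(0.9, True), (0.9, True), (0.6, False), (0.6, True), (0.3, False)]

if __name__ == "__main__":
    print(f"calibration error: {expected_calibration_error(SAMPLE):.3f}")
```

A value like `1 - error` could then serve as the score on the uncertainty-awareness spoke, though that mapping is itself an assumption that would need validating.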

Comparing Multiple Models: Capability Matrices

While star charts are excellent for representing the cognitive abilities of a single AI model, they can become cluttered and difficult to read when comparing multiple models. For these situations, I propose using Capability Matrices.

A Capability Matrix is a table where each row represents a different AI model, and each column represents a cognitive ability. The cells of the matrix could be color-coded or use numerical values to indicate the level of capability for each model in each cognitive area.

Here's a simplified example of what a Capability Matrix might look like:

| Model | Uncertainty Awareness | Self-Evaluation | Task Understanding | Knowledge Boundaries | Reasoning Transparency | Contextual Adaptation |
|---|---|---|---|---|---|---|
| AI-A | High | Medium | High | Medium | Low | High |
| AI-B | Medium | High | Medium | High | Medium | Low |
| AI-C | Low | Low | High | High | High | Medium |

This format allows for quick comparisons across multiple models and cognitive dimensions. It's particularly useful for researchers, developers, and decision-makers who need to assess the relative strengths and weaknesses of different AI systems.
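To show how such a matrix could be queried programmatically, here is a minimal sketch that stores the example values above in a plain dictionary. The ordinal encoding of Low/Medium/High as 1/2/3 and the helper name `strongest_models` are my own assumptions for illustration.

```python
# Assumed ordinal encoding so that levels can be compared.
LEVELS = {"Low": 1, "Medium": 2, "High": 3}

ABILITIES = [
    "Uncertainty Awareness",
    "Self-Evaluation",
    "Task Understanding",
    "Knowledge Boundaries",
    "Reasoning Transparency",
    "Contextual Adaptation",
]

# One row per model, in the same column order as ABILITIES.
MATRIX = {
    "AI-A": ["High", "Medium", "High", "Medium", "Low", "High"],
    "AI-B": ["Medium", "High", "Medium", "High", "Medium", "Low"],
    "AI-C": ["Low", "Low", "High", "High", "High", "Medium"],
}


def strongest_models(matrix, abilities):
    """Map each ability to the models with the highest level (ties are kept)."""
    result = {}
    for column, ability in enumerate(abilities):
        best = max(LEVELS[row[column]] for row in matrix.values())
        result[ability] = [
            model for model, row in matrix.items() if LEVELS[row[column]] == best
        ]
    return result


if __name__ == "__main__":
    for ability, models in strongest_models(MATRIX, ABILITIES).items():
        print(f"{ability}: {', '.join(models)}")
```

Keeping ties instead of picking a single winner fits the spectrum idea: the goal is to see where models differ, not to crown one overall.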

The Benefits of Spectrum Thinking

Adopting a spectrum approach to AI cognition offers several advantages:

- Nuanced Comparisons: It allows for more detailed comparisons between different AI models and systems.

- Development Tracking: We can better track the gradual development of cognitive abilities in AI over time.

- Ethical Considerations: A spectrum view may help inform more nuanced ethical guidelines for AI development and deployment.

- Research Direction: It could highlight specific areas of cognition that need further development in AI systems.

Looking Ahead

As AI development advances, our understanding of machine cognition will undoubtedly evolve. The spectrum approach provides a framework for this evolution, allowing us to move beyond simplistic "can it or can't it" debates to a more nuanced appreciation of AI capabilities.

Of course, this idea raises its own set of questions:

- How do we accurately measure these cognitive abilities in AI systems?

- What are the implications of recognizing degrees of cognition in AI for legal and ethical frameworks?

- How might this change our approach to AI development and the pursuit of artificial general intelligence (AGI)?

These are complex questions without easy answers, but they're the kind of questions that drive discussion and deepen our understanding in the field of AI.

As we stand on the cusp of potentially revolutionary advancements in AI, it's crucial that we refine our tools for understanding and discussing these systems. The spectrum approach to AI cognition is offered here not as a fully developed theory, but as a starting point for new perspectives and discussions in the AI community.

After all, if we're going to build thinking machines, we might as well think deeply about how they think, right?