The AI industry has long been captivated by the idea of self-improving systems. Traditional approaches have focused on knowledge augmentation and information feedback loops: essentially, teaching AI to learn from its experiences and expand its knowledge base. But what if a more powerful paradigm is waiting to be unleashed?

Enter the Model Context Protocol (MCP) and a radical new possibility: AI systems that don't just use tools, but build them.

A Step Beyond Manual Integration

Today's MCP server landscape is dominated by manually crafted adapters for service integration. Developers painstakingly create connectors for databases, APIs, and various services, building a library of tools that AI models can leverage. It's effective, but it's also limiting, constrained by human bandwidth and imagination.

What many haven't fully grasped is that we've reached a tipping point. Large Language Models (LLMs) now possess the capability to build their own MCP tools, tailored to specific contexts and needs. This isn't just about automation; it's about AI systems that can extend their own capabilities on demand.

The Self-Building Tool Pipeline

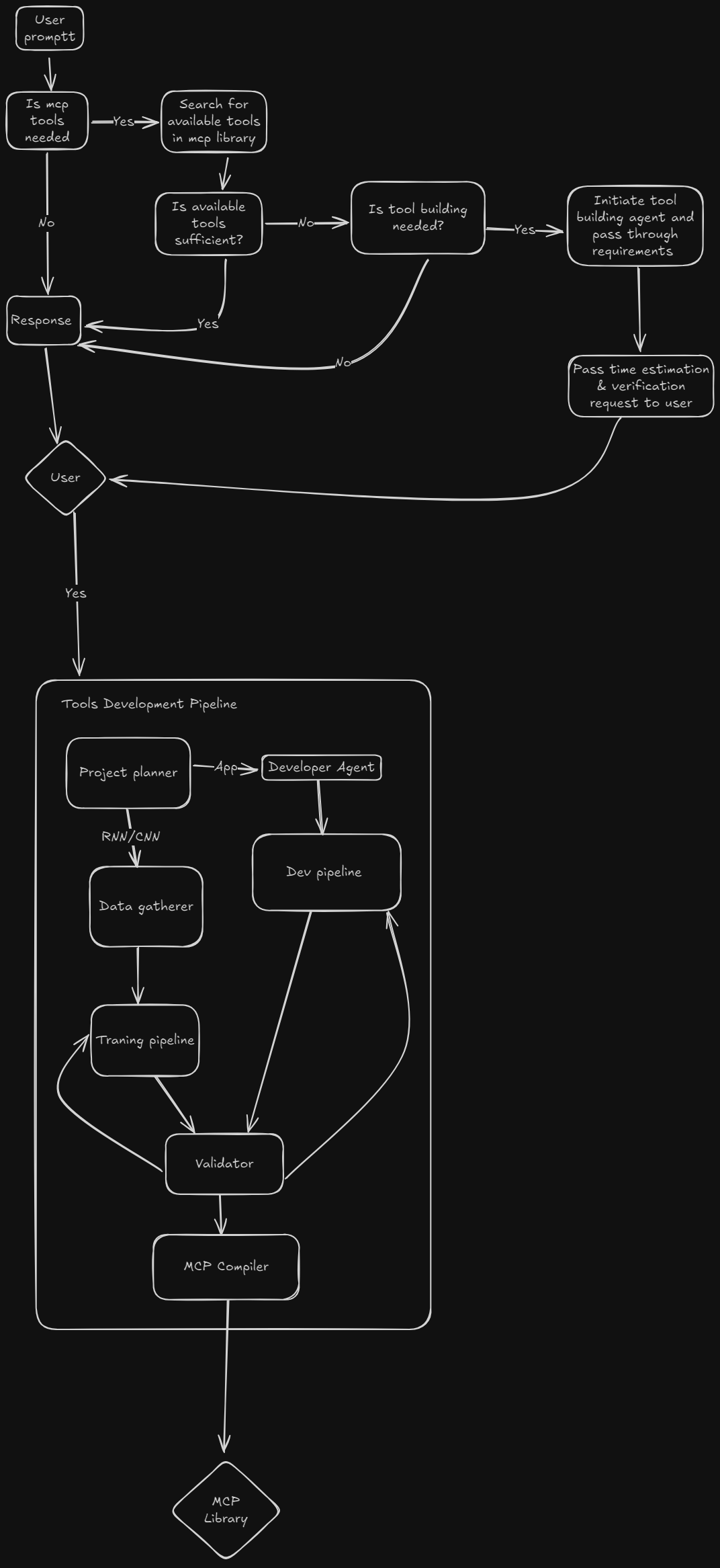

Imagine this scenario: An AI receives a user prompt requiring capabilities it doesn't currently possess. Instead of returning an error or a limited response, it initiates a sophisticated pipeline:

- Recognition: The system identifies that existing tools are insufficient

- Planning: A project planner agent designs the required tool

- Development: A developer agent writes the actual code

- Data Collection: If needed, agents gather training data

- Model Training: Custom RNN or CNN models are trained for specific tasks

- Validation: The new tool is tested and verified

- Compilation: The tool is packaged and added to the MCP tool library

- Integration: The newly created tool becomes available for immediate use
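The pipeline above can be sketched as a small orchestration loop. This is a minimal, hypothetical sketch. `ToolSpec`, `ToolRegistry`, `ensure_tool`, and the stage functions are illustrative names, not part of any MCP SDK. The planner and developer agents are replaced by deterministic stubs where a real system would prompt an LLM. Data collection and model training are left as a comment, and registering the tool stands in for the compilation and integration steps.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical sketch: ToolSpec, ToolRegistry, and the stage functions are
# illustrative names, not MCP SDK APIs. LLM-driven agents are stubbed out.


@dataclass
class ToolSpec:
    name: str
    description: str
    # (arguments, expected result) pairs written by the planner for validation
    test_cases: List[Tuple[Dict[str, Any], Any]]


@dataclass
class ToolRegistry:
    tools: Dict[str, Tuple[ToolSpec, Callable[..., Any]]] = field(default_factory=dict)

    def has(self, name: str) -> bool:
        return name in self.tools

    def register(self, spec: ToolSpec, fn: Callable[..., Any]) -> None:
        self.tools[spec.name] = (spec, fn)

    def call(self, name: str, **kwargs: Any) -> Any:
        _, fn = self.tools[name]
        return fn(**kwargs)


def recognize(registry: ToolRegistry, capability: str) -> bool:
    """Recognition: True when no existing tool covers the capability."""
    return not registry.has(capability)


def plan(capability: str) -> ToolSpec:
    """Planning: stub for a planner agent; a real one would prompt an LLM."""
    return ToolSpec(
        name=capability,
        description="Count the words in a string.",
        test_cases=[({"text": "hello world"}, 2), ({"text": ""}, 0)],
    )


def develop(spec: ToolSpec) -> Callable[..., Any]:
    """Development: stub for a developer agent that would generate code."""
    def word_count(text: str) -> int:
        return len(text.split())
    return word_count


def validate(spec: ToolSpec, fn: Callable[..., Any]) -> bool:
    """Validation: run every planner-supplied test case against the tool."""
    return all(fn(**args) == expected for args, expected in spec.test_cases)


def ensure_tool(registry: ToolRegistry, capability: str) -> bool:
    """Run the pipeline only when the capability is missing."""
    if not recognize(registry, capability):
        return True  # an existing tool is sufficient
    spec = plan(capability)
    fn = develop(spec)
    # Data collection and model training would run here when a tool needs them.
    if not validate(spec, fn):
        return False  # a tool that fails its own tests is never registered
    registry.register(spec, fn)  # stands in for compilation and integration
    return True


registry = ToolRegistry()
ensure_tool(registry, "word_count")
print(registry.call("word_count", text="tools that build tools"))  # prints 4
```

The key design choice here is making validation a hard gate before registration. A generated tool that fails its own test cases never becomes callable.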

This isn't science fiction: the building blocks exist today.

Why 2025 Changes Everything

The maturity of tool calling in 2025 represents a crucial milestone. We've largely moved beyond the era of hallucinated function calls and unreliable tool interactions. Modern LLMs can dependably invoke tools, interpret their outputs, and make decisions based on real data. This reliability is the foundation that makes self-building tools feasible.
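Reliable tool calling also depends on the host checking each call before it executes. The sketch below assumes the model emits calls as JSON objects with `name` and `arguments` fields. It checks each call against a descriptor shaped loosely like an MCP tool definition (`name`, `description`, `inputSchema`). The `add` tool, the `dispatch` and `error` helpers, and the `ok`/`error` result shape are all hypothetical. The type check covers only a small hand-rolled subset of JSON Schema rather than a full validator.

```python
import json
from typing import Any, Dict

# Descriptor shaped loosely like an MCP tool definition. The "add" tool is hypothetical.
TOOLS: Dict[str, Dict[str, Any]] = {
    "add": {
        "name": "add",
        "description": "Add two integers.",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
            "required": ["a", "b"],
        },
    }
}
IMPLS = {"add": lambda a, b: a + b}

# A small hand-rolled subset of JSON Schema types, not a full validator.
PY_TYPES = {"integer": int, "number": (int, float), "string": str, "boolean": bool}


def error(message: str) -> Dict[str, Any]:
    return {"ok": False, "error": message}


def dispatch(raw_call: str) -> Dict[str, Any]:
    """Validate a model-emitted call, then run it or return a structured error."""
    try:
        call = json.loads(raw_call)
    except json.JSONDecodeError:
        return error("call is not valid JSON")
    if not isinstance(call, dict):
        return error("call must be a JSON object")
    name = call.get("name")
    args = call.get("arguments", {})
    if name not in TOOLS:
        return error(f"unknown tool: {name}")  # catches hallucinated tool names
    if not isinstance(args, dict):
        return error("arguments must be a JSON object")
    schema = TOOLS[name]["inputSchema"]
    for key in schema["required"]:
        if key not in args:
            return error(f"missing argument: {key}")
    for key, value in args.items():
        prop = schema["properties"].get(key)
        if prop is None:
            return error(f"unexpected argument: {key}")
        # bool is a subclass of int in Python, so reject it unless the schema asks for one
        if isinstance(value, bool) and prop["type"] != "boolean":
            return error(f"wrong type for {key}")
        if not isinstance(value, PY_TYPES[prop["type"]]):
            return error(f"wrong type for {key}")
    return {"ok": True, "result": IMPLS[name](**args)}


print(dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}'))
# prints {'ok': True, 'result': 5}
print(dispatch('{"name": "subtract", "arguments": {"a": 2, "b": 3}}'))
# prints {'ok': False, 'error': 'unknown tool: subtract'}
```

Returning a structured error instead of raising an exception lets the model read the failure and retry with corrected arguments.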

Moreover, the ability of LLMs to initiate and train custom neural networks, whether RNNs for sequential data or CNNs for visual tasks, adds another dimension of capability. An AI system could recognize the need for specialized image processing, spin up a CNN training pipeline, and integrate the resulting model as a new tool, all without human intervention.

The Power of Parallel Evolution

Perhaps the most compelling aspect of this approach is how it enables parallel evolution. Traditional AI improvement methods often require retraining or fine-tuning the base model, a costly and time-consuming process. With self-building MCP tools, the core LLM and its tool library can grow independently yet remain fully compatible.

This means:

- Faster iteration: New capabilities can be added without touching the base model

- Specialized solutions: Tools can be hyper-optimized for specific use cases

- Universal compatibility: Any new tool benefits every MCP-compatible AI system

- Reduced computational costs: No need to retrain massive models for new capabilities

Looking Ahead: The 2026 Prediction

As we stand on the brink of 2026, I predict we'll witness an explosion of AI agent-built MCP tools. These won't be simple scripts or basic integrations, but sophisticated tools created by AI, for AI, addressing needs we haven't even anticipated yet.

This shift will fundamentally change how we think about AI capabilities. Instead of asking "What can this AI do?", we'll ask "What can this AI learn to do?" The answer, increasingly, will be "almost anything."

The Implications

This evolution raises profound questions and opportunities:

- Autonomy: AI systems that can extend their own capabilities approach true autonomy

- Specialization: We might see AI systems that specialize by building unique tool sets

- Collaboration: AI systems could share tools, creating an ecosystem of capabilities

- Governance: How do we ensure safety when AI can build its own tools?

Conclusion

The combination of mature tool calling, a reliable, standardized protocol in MCP, and AI's growing ability to write and train specialized models creates a perfect storm of capability expansion. We're not just teaching AI to fish; we're teaching it to build better fishing rods, invent new fishing techniques, and maybe even discover that sometimes a net works better.

As we move through 2025 and into 2026, watch for the emergence of AI systems that surprise us not just with what they know, but with what they can become. The age of static AI capabilities is ending. The age of self-extending AI is about to begin.